As I wrote about last week, AI tools are igniting a self-help revolution. You no longer have to rely on people to give edits, advice, or support… bots are there to help at every turn.

I’m deeply worried about the ways that scaling self-help reinforces rugged individualism and discounts human connection.

But I’m also watching another trend: how our cultural commitment to rugged individualism shapes when people do and don’t use AI and how they judge others for using it.

Legitimate use is a live, thorny issue. Is having AI edit a homework essay considered cheating? What about brainstorming topics? When does using AI make you a pioneer at work, and when does it make you appear less competent? If AI drafts a personal note for you, is it really from you?

The answers to these questions are about so much more than AI—they are about a deeper set of value judgments and norms that determine what we count as individual creation, contribution, and success.

That’s why I was so interested to read these three recent studies:

“I think it’s important I do it myself”

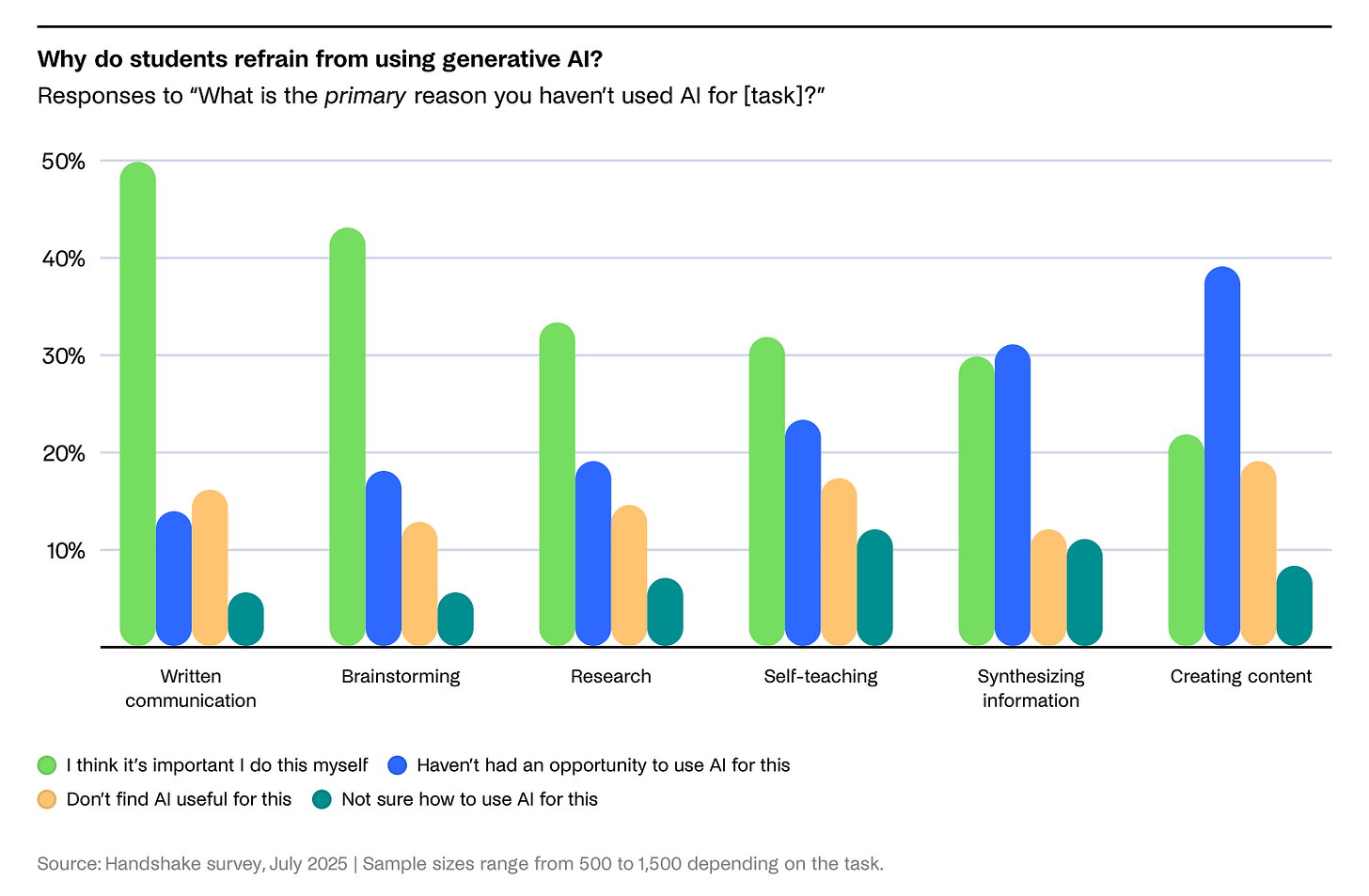

First up, a new report from Handshake, a jobs platform for college students, on how students perceive AI as both a tool and a threat. This report mostly looks at the ways students are navigating the brave new world of the AI-infused labor market. But one chart outlines the reasons students cited for not using AI across different types of tasks.

Source: Handshake, 2025

Note: these charts are about what led students to refrain from using AI, not the total rates at which they refrained.

“I think it’s important I do it myself” is a powerful—and poignant—statement. It contains both a sense of work ethic and a potential aversion to seeking out help.

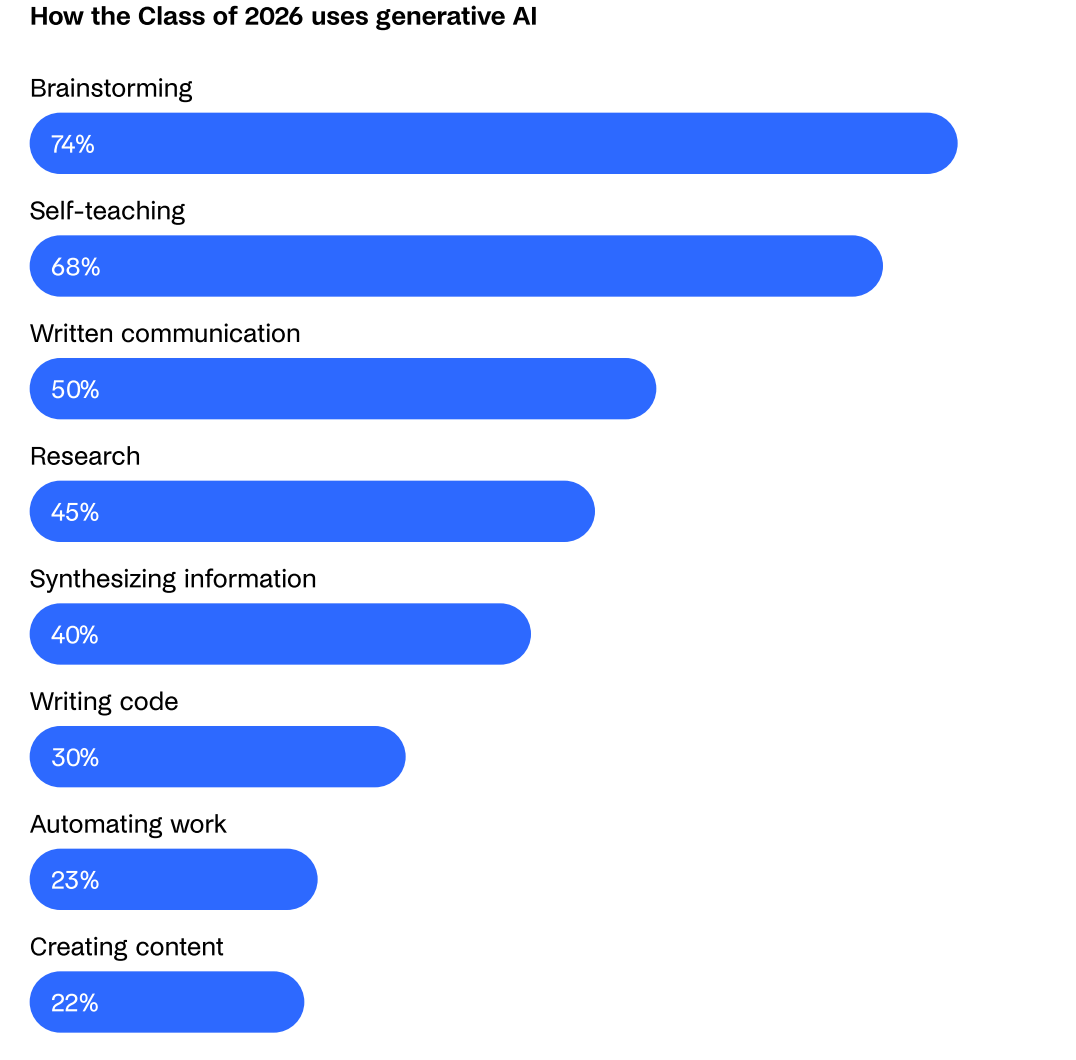

What’s equally fascinating, however, is that when Handshake asked students how they are using AI, they found almost the inverse of that chart:

Source: Handshake, 2025

In other words, the very domains where students sometimes refrain from using AI out of a commitment to DIY-ing it are the same domains where students report using the tech most frequently. Students are living proof of the cognitive dissonance of this moment: we expect a lot of ourselves… and yet we have unfettered access to tools that can do so much.

Use AI, but don’t tell me you use AI

College students aren’t the only ones sitting in the messy middle between the virtues of DIY and the upsides of AI.

A new study shows that we place enormous value on people producing their own work. In fact, disclosing you’ve used AI comes at a steep cost: trust.

In “The transparency dilemma: How AI disclosure erodes trust,” researchers ran an elaborate series of 13 studies showing that disclosing your use of AI may be honorable, but it’s risky. The studies tested how disclosure affected trust across a variety of scenarios: students seeing that their professor used AI in grading, hiring managers reading job applications with disclosures, and workers receiving emails from colleagues saying they were using AI assistants for scheduling.

Across all of those contexts, disclosure led recipients to question the legitimacy of the person disclosing.

The findings have huge implications, especially given that they buck the popular wisdom that greater transparency means greater trust. (One caveat: they found it’s better to disclose AI usage than to be exposed for having used it without disclosing… a highly relevant detail in the age of AI checkers.)

Ironically, even people who use AI more actively had low trust in those who disclosed having used it. As the authors sum up, “The AI-disclosure effect highlights how individuals condemn others for using AI—even when doing so themselves.” That suggests this isn’t just about people’s attitudes toward technology per se. Rather, it’s about their attitudes toward one another, and a much deeper collection of social norms around individual work and authorship.

Don’t let AI do your job

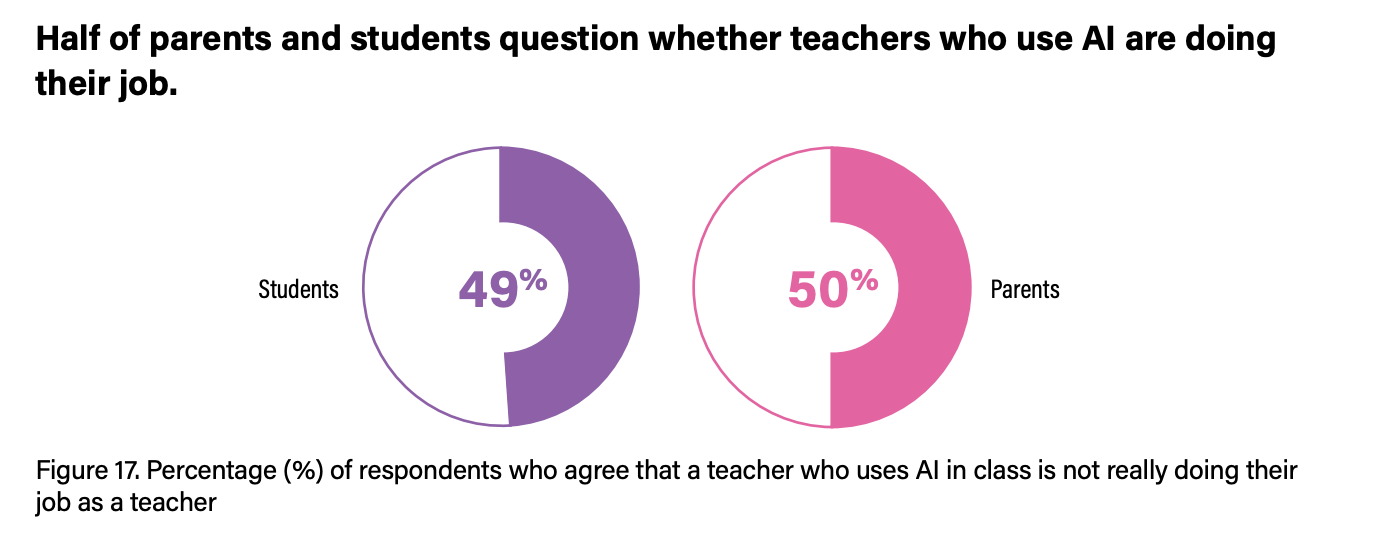

Another recent study demonstrates AI’s reputational costs in the classroom—not to students but to their teachers.

According to a new survey from the Center for Democracy & Technology, an astounding half of parents and students said that they believe a teacher who uses AI in the classroom is “not really doing their job.”

Source: Center for Democracy & Technology, 2025

This suggests that the penalty described in the transparency dilemma extends beyond just written work products and into services.

It also reveals yet another tax teachers face… in a system that rarely rewards them for doing an incredibly demanding job.

(Side note: I’d be curious to see whether the same penalty holds true in healthcare. I was recently reflecting with a colleague that healthcare tools offering a more pleasant patient experience feel so much more appealing than education tools that swap tech for humans. I think that has to do with my own recent experiences being so utterly dehumanized by the healthcare system that I’d happily trade a surly human for an empathetic bot any day.)

We may judge help…but we all want (and need) it

Honestly, I don’t have a tidy takeaway based on these studies. Sometimes using AI seems truly innovative and sometimes not using it seems more authentic and virtuous. I’ve wrestled with how to frame my thinking and am not totally satisfied with where I’ve landed.

But here’s my best shot:

The fact that AI is helpful and that we also have misgivings about that help shouldn’t surprise us. In fact, it’s consistent with a long history in the analog world: humans need and rely on help, yet our culture frequently rejects or obscures that truth.

I’ve spent a decade studying how social capital—aka human help—is critical to people getting by and getting ahead.

The truth is, there’s always been a giant invisible market of informal help: private tutors, college counselors, older siblings, friends, mentors, and sponsors who make a huge difference in people’s life trajectories. In fact, access to cross-class connections is among the strongest known predictors of economic mobility. We are social creatures. Human support is a linchpin of individual achievement.

Yet we rarely talk about that fact in polite company. Indeed, in recent years, influencer culture and TED talks (and yes, even Substack 🫣) have further solidified the cult of the individual. We lionize people who do it all, and who appear to do all of it alone.

As AI starts to democratize access to help, it’s time we talk about the invisible helping hands that have always supported individual success.

That will push us to explore two aspects of “help” in this turbulent moment of technological change:

First, it could help us clarify what constitutes “legitimate use” of AI with a far greater commitment to fairness. If some people benefit from human help without consequence to their reputation, should others be punished for relying on AI? At what point does the extent of help, or the intent behind it, compromise individual integrity, whether that help comes from AI or from a human?

Second, if we can have a more honest conversation about all of the help hiding behind “individual” success, we can start to soften a longstanding commitment to rugged individualism that doesn’t serve us and that leaves too many people behind. This is especially true when I think about the messages we send young people today, and the support they deserve. Yes, we should want students to work hard and to develop critical thinking and ingenuity. But we also should want students to appreciate that people rarely accomplish things alone in life, and that giving and receiving help is core to the human experience.

That could address what I think is most vulnerable at this moment: not our reputations, but our commitment to helping each other and to charting a path to collective success in the age of AI.

Disclosure: I did not use AI in writing this piece, but I did have a long discussion with my husband about the differences and similarities between AI and human help, and a human editor helped me polish the piece.

Love this! So much to think about and hopefully TALK to other humans about! The data here shines a light on some pretty crazy truths that we would do well to wrestle with a bit more!

I like your thinking. Many successful people that society looks up to are in their positions because of their social capital and the access they had to guidance. AI has the potential to level the playing field. I talked to a mentoring program leader the other day who is helping students use ChatGPT as a thought partner. If it helps them with their critical thinking skills as they prepare for a homework assignment or class project, it is actually doing the job of a good teacher that many kids don't have equal access to.